Watch the new Fujitsu fi-800R in action. We review the scanner operation and functions.


Unboxing the Fujitsu fi-800R

We got the new Fujitsu fi-800R and we’re unboxing it.

Beyond Traditional Data Capture – Machine Learning and Natural Language Processing

Many companies have been using Data Capture applications for years. They work really well and do exactly what they say on the ‘tin’. Identification, extraction and verification are all standard fare for these systems. Pushing the data into other business systems is routine and efficient.

But what’s happening next?

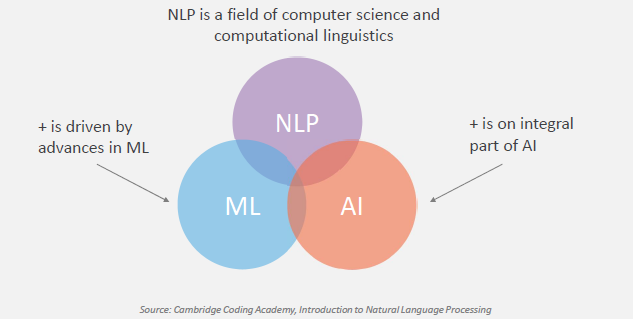

The ‘buzz’ words for the last few years have been Natural Language Processing (NLP) and Machine Learning (ML). Both of these technologies have been slow to mature. In the early stages, they were somewhat clumsy and difficult to set up. They were designed with a very narrow focus on certain types of documents. The results had a certain ‘wow’ factor, but it was difficult to turn that into a production application. As the technology has evolved, that has gradually changed.

Amazon have pushed ML hard and are now closing the loop with NLP. The ‘pay per use’ model is easy to understand and to cost, which has led to take-up among developers and a growing community building real-world applications.

Microsoft have long touted an NLP engine. The MSR-NLP research group are looking at real-world areas that are not related to document and data capture. ML at Microsoft is now integrated into the .NET stack, so developers can use the ML engine and build applications on it. Azure is fertile ground for deploying an ML engine, allowing developers to create new instances that process specific data sets and produce an output for consumption. Both of Microsoft’s approaches focus less on data extraction from documents and more on data analytics and data mining. We’ll see what they do next.

Data Capture powerhouses in the document imaging world are now focused on solutions targeted at documents and data extraction. ABBYY has integrated ML and NLP into its base data capture system. It has evolved the technology to a point where the setup is logical and the engine testing is open enough for engineers to deploy. All of the tools needed for ingestion and output are available.

By combining the existing data capture engine with the NLP and ML engines, ABBYY now have an offering that can capture data across nearly all styles of document: structured, semi-structured and unstructured. This opens up areas that were traditionally more of a challenge, with Real Estate and Medical as prime examples. With NLP, emails can now also be included as a source for data extraction.

There are some limitations. Handwriting is still an area where good results should not be expected. Poor quality documents also produce low quality results. Languages are still not fully supported: the way that languages differ in semantics is still an issue for NLP, and each language has to be trained specifically. Tables and lists are not good candidates for NLP, nor are areas where text is not surrounded by other text. Most of these can be addressed using traditional techniques that have been tried and tested over time.
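The limitations above can be read as a simple routing rule: send a region of a document to NLP only when none of the listed problems apply. The sketch below is purely illustrative and is not part of ABBYY FlexiCapture or any iKAN product. The `Region` fields, the `TRAINED_LANGUAGES` set, the quality scale and the `"verify"` outcome for handwriting and poor scans are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical: languages for which an NLP model has been trained.
# The article only says each language is trained specifically.
TRAINED_LANGUAGES = {"en"}


@dataclass
class Region:
    kind: str              # "paragraph", "table", "list" or "isolated"
    language: str          # language code of the text, e.g. "en"
    handwritten: bool = False
    quality: float = 1.0   # assumed scale: 0.0 (unreadable) to 1.0 (clean)


def choose_engine(region: Region, min_quality: float = 0.6) -> str:
    """Return "nlp", "traditional" or "verify" for one document region."""
    # Handwriting and poor scans give weak results, so flag them
    # for manual verification (an assumption for this sketch).
    if region.handwritten or region.quality < min_quality:
        return "verify"
    # No trained model for this language: fall back to traditional rules.
    if region.language not in TRAINED_LANGUAGES:
        return "traditional"
    # Tables, lists and text with no surrounding text suit traditional capture.
    if region.kind in {"table", "list", "isolated"}:
        return "traditional"
    return "nlp"


if __name__ == "__main__":
    print(choose_engine(Region(kind="paragraph", language="en")))
    print(choose_engine(Region(kind="table", language="en")))
```

In practice the thresholds and categories would come from testing against real documents rather than fixed values like these.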

Using ABBYY’s engine, iKAN is constantly reviewing our customers’ needs and looking at new areas where this technology can be applied. Our Virtual Document Solution platform is fully integrated with ABBYY FlexiCapture, allowing iKAN to deliver the efficiency and quality that have long been a key differentiator for ABBYY. Our Cloud offering is an excellent way to deploy a solution in a short period of time, at lower cost than an ‘On Premise’ solution. We can typically get a system up and running in a few days, giving you real output to review and integrate into your internal systems.

Contact us for an informal, non-sales chat!